AI Tinkerers, Jan 30 2025

Finding good tech meetups in Downtown Toronto has never been easy—especially if you’re avoiding bars. That’s why it was great to see AI Tinkerers bringing the local scene to life with their Jan 30 event at Google’s King Street office.

We kicked things off with a fireside chat with David Fleet from Google DeepMind, led by Tiffany Janzen. David has done some incredible work in computer vision and image generation, and it was a real treat to hear his perspective on how the field has evolved and accelerated.

And then came the demos!

Addy Bhatia (Lazer Technologies): AI Web Scraping with Vision Models - Addy explored how AI-driven tools can transform web scraping using vision-language models and automation.

Addy used Microsoft's OmniParser (which I hadn't heard of and definitely want to play with) in combination with some LLM tech to make an AI agent that could visit web pages, apply filters to searches, and scrape the results. It wasn't the fastest thing in the world, but it was a fantastic example of how to stitch together existing technologies to compose something novel. We use a variety of RPA tools at work, and I'm curious whether something like this could bridge any of our (many) gaps.

Someone from Google: Maybe Project Astra? - Okay, confession time: I didn’t catch the speaker’s name, and it’s not on the website. But what I did see was a fascinating demo of Google’s AI capabilities, possibly Project Astra—or something even newer? If you were there and know the details, please get in touch! But it was a cool demonstration across a range of capabilities, all done through voice interaction with a bit of computer vision built in.

My challenge with big tech vendor demos is that they can be mind-blowing, but part of me suspects they'll never see broad usage. Google doesn't have the best rep for product development and sustainability (RIP Reader, but remember their cloud gaming platform that made the cover of Edge and Wired?[1]), and Gemini seems amazing but doesn't get nearly as much attention as its competitors. So this might just be a directional signal for what we could be seeing, from someone, in the next five years.

Millin Gabani (Keyflow): WhisperType - Millin demonstrated WhisperType, a Mac app he’d developed that transcribes his voice input using a locally hosted Whisper model. He then used that tool to demonstrate building software via prompts, in a “yo dog, I heard you liked AI, so we put AI in your AI…” kind of vibe. I haven’t done a lot of voice-based computing other than some ChatGPT while hiking in the woods to brainstorm ideas, but there’s something compelling about a hands-off-the-keyboard approach to coding that might help me focus more on the outcome and not try to do what’s increasingly the computer’s job.

Interestingly, someone in the audience pointed out that there’s some competition in the market that’s also developed in Toronto. SuperWhisper looks more fully formed (Millin’s app is still in beta) but without putting down the other apps, Millin said that there was nothing out there that fit his personal needs so he built his own. Which is super cool and I hope we’ll see more examples of this as (if? when?) software development gets easier.

WhisperType hasn’t launched yet, but there’s an early access form if you’d like to know more.

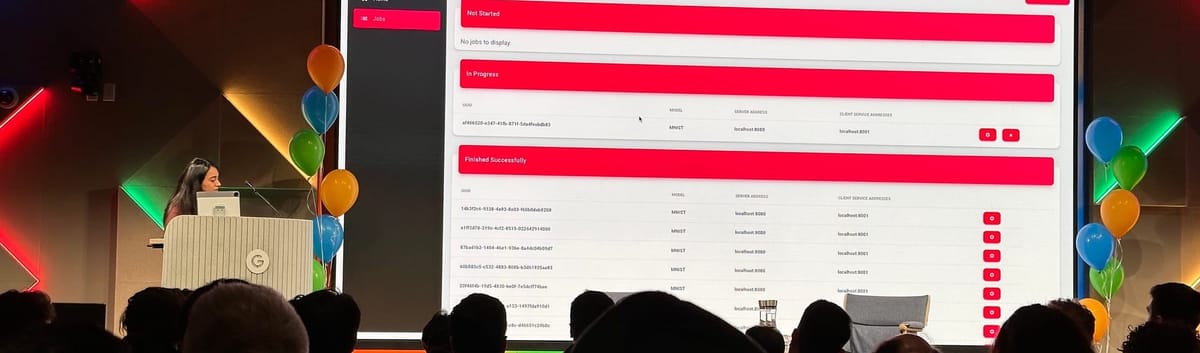

Sana Ayromlou (Vector Institute): FL4Health: Federated Clinical AI - Sana discussed FL4Health, an open-source federated learning engine for healthcare that ensures secure, personalized AI model training while preserving data privacy.

I remember hearing about federated learning several years ago when I was just getting into AI and ML. It’s a cool technology that enables models to be trained across multiple devices or locations without sharing raw data, improving privacy while still allowing a central model to be updated collaboratively. I didn’t have a use case for it, but I’m glad to see it’s still a thing.
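To make that idea concrete, here is a minimal sketch of federated averaging (FedAvg) in plain Python. This is not FL4Health's API, and it isn't taken from Sana's talk. The two "hospitals", their toy data, and every function name below are made up for illustration. Each site fits a tiny linear model on its own data, and only the model weights travel to the server.

```python
from typing import List, Tuple

Weights = Tuple[float, float]        # (slope, intercept) of a toy linear model
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave their site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Run a few gradient-descent steps on one site's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine site models, weighting each by how many examples it holds."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return w, b


def train(sites: List[Dataset], rounds: int = 50) -> Weights:
    """Server loop: send weights out, collect local updates, average them."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Only weights cross the network; the raw (x, y) pairs stay put.
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Hypothetical toy data, generated from y = 2x + 1 and split across two sites.
hospital_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
hospital_b = [(3.0, 7.0), (4.0, 9.0)]

w, b = train([hospital_a, hospital_b])
print(f"w={w:.3f}, b={b:.3f}")  # should land close to w=2, b=1
```

A real deployment layers a lot on top of this (secure aggregation, personalization, handling sites whose data looks very different), but the round trip of "train locally, share weights, average" is the core of it.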

FL4Health applies federated learning in a high-stakes environment—healthcare—where data privacy is paramount. Unlike big tech’s centralized models, this approach ensures patient data stays where it belongs while still contributing to better AI-driven insights.

As with data clean rooms, getting multiple parties to participate requires some additional technical maturity from every participant, but Sana & co’s work looks like it includes interfaces to help facilitate that.

Bhavya Trivedi (York University): Mobile Pings: Wildfire Prediction AI - Bhavya explored the use of ConvLSTM and crowd-sourced mobile data to predict wildfire spread, showcasing practical AI applications for environmental challenges.

This was more traditional ML/data science, which is really important to see when we’re inundated with LLM news every day. Bhavya used open datasets about California fire department calls to see what he could predict. He was limited by the availability of detailed fire response data, which made predictions more challenging, but the presentation gave the audience a lot of threads to pull on for their own learning.

I can’t remember how I found this group, but I really appreciate their approach to building community. We all had to apply to get access to the event, which led to a comprehensive list of profiles that were then shared with attendees to facilitate networking. Ironically, I used this to find people at my own company, and rather than reach out at work, I was actively stalking nametags during the networking session.

And there were a lot of opportunities to network. We had about half an hour to mingle before things kicked off, and there were a lot of first timers like me who didn’t know anyone there, so it was easy to start a conversation. After the first two presentations we had a one-hour dinner break, which was an amazing idea I hadn’t seen before: we now had things to talk about!

All in all it was a great night, and I’ve got a range of personal and professional followups added to my list. My thanks to The Human Feedback Foundation for putting this together and to Google for hosting. It’s not every day you get to hear from leading AI minds, swap ideas over dinner, and leave with a list of people to follow up with. If this is a glimpse of where Toronto’s AI scene is headed, I’m all in.

[1] Remember magazine covers?